Recent Projects

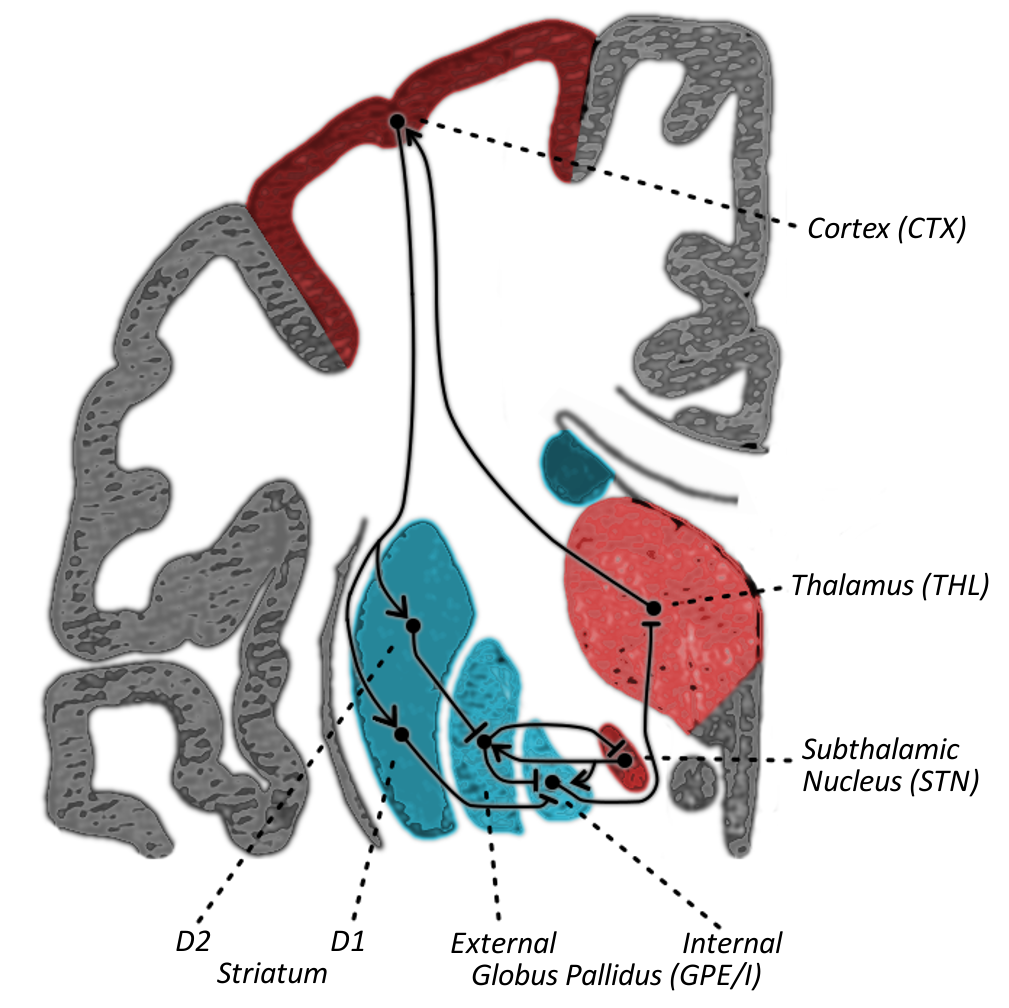

Emergent Oscillations in Brain Networks

Past models of basal ganglia circuits emphasize reinforcement learning but fail to explain oscillations in the basal ganglia that vary across tasks and conditions. We built a spiking neural network model that explains how basal ganglia oscillations emerge and vary over time. The model depends on spike synchronization to produce oscillations with properties similar to those of human brain waves. The model can exhibit either strong oscillations or strong selection, which is important for carrying out decisions, and it can rapidly switch between these states. These results are consistent with an emerging perspective in which oscillations in the brain provide an active substrate for communication, allowing information to spread quickly to all the regions where it is needed.
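As a toy illustration of why spike synchronization matters for population-level oscillations, the sketch below bins spikes from idealized neurons that each fire once per cycle. It is not the spiking model described above: the function names, the 50 ms period, and the 5 ms bin width are all assumptions chosen for illustration. When every neuron fires in phase, the population rate swings between bursts and silence; when phases are spread evenly, the rate is flat.

```python
def population_rate(phase_offsets, period=50, duration=500, bin_width=5):
    """Count spikes per time bin for neurons that each fire once every `period` ms.

    `phase_offsets` gives each neuron's first spike time in ms (hypothetical setup).
    """
    counts = [0] * (duration // bin_width)
    for offset in phase_offsets:
        t = offset
        while t < duration:
            counts[int(t // bin_width)] += 1
            t += period
    return counts


def oscillation_strength(counts):
    """Variance-to-mean ratio of the binned population rate (0 means a flat rate)."""
    mean = sum(counts) / len(counts)
    if mean == 0:
        return 0.0
    variance = sum((c - mean) ** 2 for c in counts) / len(counts)
    return variance / mean


# 100 neurons firing in phase versus 100 neurons spread evenly over one cycle.
synchronized = [0] * 100
desynchronized = [i * 0.5 for i in range(100)]

print(oscillation_strength(population_rate(synchronized)))    # 90.0
print(oscillation_strength(population_rate(desynchronized)))  # 0.0
```

The variance-to-mean ratio is only one possible summary of rhythmicity; a spectral measure would be a more typical choice for real spike trains.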

Collaborators: Dave Noelle, Anne Warlaumont, Ramesh Balasubramaniam

Manuscript in preparation | Poster @ SfN 2017 | github

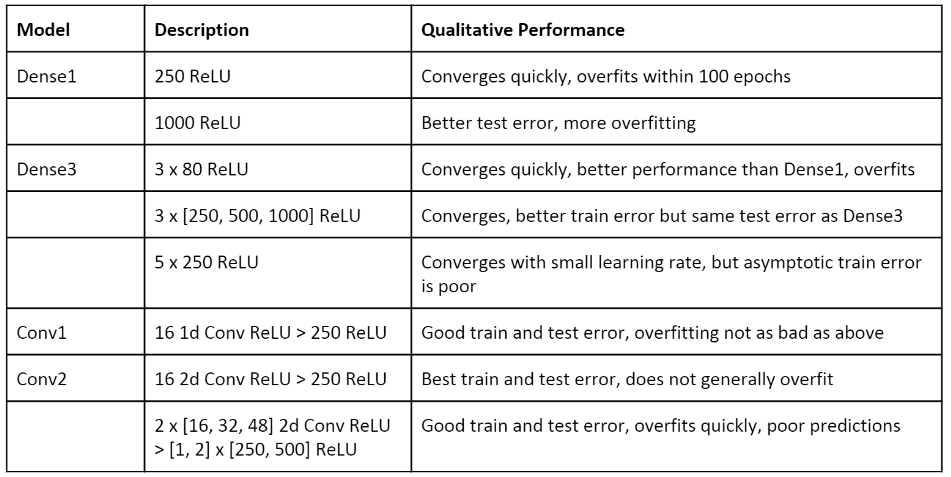

Deep Learning + Robot Arm Simulation

Learning a motor transfer function for even a simple, 3-joint arm--as opposed to the dozens of skeletal joints and hundreds of independent muscles of the human arm--is a difficult problem. Physical constraints, friction, non-linear torque scaling, and many other factors contribute to complexity in the relationship between a motor command and the controlled endpoint. I trained deep neural networks in TensorFlow to predict the position of the hand from a motor command for a simulated robot arm. I found that convolution and high dropout rates yielded the best performing solutions, but predictions of the hand position still tended to diverge from the actual position over 1-2 seconds, indicating that a closed feedback loop is almost certainly required to model this complex dynamical system.
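The divergence described above can be reproduced in a much simpler setting. The sketch below is not the simulated arm or the trained networks: it assumes a planar 3-joint arm with made-up link lengths, a constant joint-velocity command, and a stand-in "predictor" whose velocity gain is off by 5%. Run open loop, the small per-step error accumulates over 2 seconds; with a hypothetical feedback term that pulls the prediction toward the observed joint angles, the error stays small.

```python
import math

LINK_LENGTHS = (0.30, 0.25, 0.15)  # hypothetical link lengths in meters


def hand_position(angles, lengths=LINK_LENGTHS):
    """Forward kinematics of a planar serial arm: joint angles (rad) -> hand (x, y)."""
    x = y = total = 0.0
    for angle, length in zip(angles, lengths):
        total += angle  # each joint angle is relative to the previous link
        x += length * math.cos(total)
        y += length * math.sin(total)
    return x, y


def simulate(steps=200, dt=0.01, model_gain=0.95, feedback=0.0):
    """Return the hand-position error (m) at each step.

    feedback=0.0 is open loop; feedback>0 moves the predicted joint angles
    a fraction of the way toward the true angles after every step.
    """
    command = (1.0, -0.5, 0.8)  # assumed constant joint velocities, rad/s
    true = [0.0, 0.0, 0.0]
    pred = [0.0, 0.0, 0.0]
    errors = []
    for _ in range(steps):
        true = [a + v * dt for a, v in zip(true, command)]
        pred = [a + model_gain * v * dt for a, v in zip(pred, command)]
        pred = [p + feedback * (t - p) for p, t in zip(pred, true)]
        (tx, ty), (px, py) = hand_position(true), hand_position(pred)
        errors.append(math.hypot(tx - px, ty - py))
    return errors


open_loop = simulate()
closed_loop = simulate(feedback=0.5)
print(f"open-loop error after 2 s:   {open_loop[-1]:.4f} m")
print(f"closed-loop error after 2 s: {closed_loop[-1]:.4f} m")
```

A learned model would make state-dependent errors rather than a fixed gain error, but the accumulation mechanism is the same: without observations to correct it, each step's prediction error is carried into the next.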

Embodied Word Learning in Virtual Reality

We developed a new experimental method to study how sensorimotor interaction influences word learning. Using Unreal Engine and the HTC Vive, we taught participants novel words for six potions. In the forthcoming paper, we describe how participants' interactions with the potions shape their later responses to those novel words. This provides new evidence that language is active: it describes the ways our bodies interact with the world.

Collaborators: Chelsea Gordon, Ramesh Balasubramaniam, Dave Noelle

VR Toolkit | Manuscript in preparation

Modeling Infant Speech Development with Spiking Neural Networks

We connected a spiking neural network model to an articulatory synthesizer--a computational simulation of a human vocal tract--to investigate the ways that auditory feedback might shape motor control for speech. We found that very simple reservoir-style networks are capable of learning to shape the vocal tract to produce moderately syllabic sounds (e.g., rhythmic opening of the jaw to produce "Ogg-Ogg-Ogg").

Advisor: Anne Warlaumont

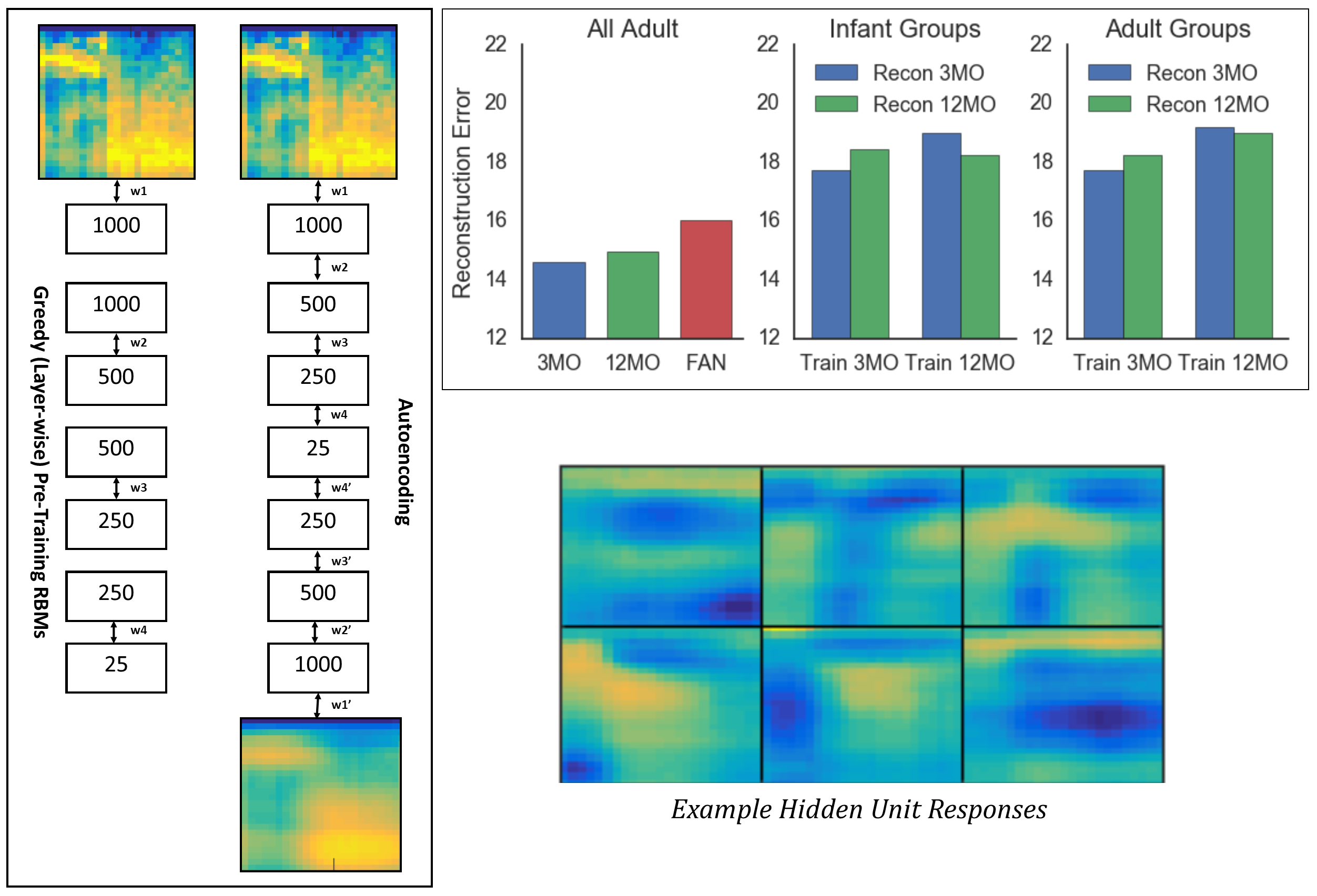

Deep Autoencoding of Naturalistic Infant and Parent Vocalizations

We adapted a deep autoencoder network to reconstruct naturalistic infant and parent speech sounds. We then used the model to explore the similarity between sounds produced at different ages, and mapped the responses of hidden-layer units to visualize the underlying structure of the dataset.

Collaborators: Anne Warlaumont, Chris Kello, Dave Noelle, Gina Pretzer, Eric Walle